Data monitoring across multiple sources, or one central solution?

For many, perhaps most, large-scale operations, permits require monitoring of many different media (for example air, soil, surface water, groundwater and noise). These data often have to be combined with supplementary data (for example production or weather data) and with meta-information (for example flow rates, elevation, location).

Different data streams come with different monitoring frequencies, compliance levels, calculations and so on.

Monitoring equipment often comes with its own software, but that software rarely tries to combine its data with data from all the other equipment on site, field readings, laboratory reports and so on. Nor does it handle many of the other permit requirements, such as managing monitoring schedules, analysing the collated data sets or producing reports. These information silos often differ from one another, with different interfaces and capabilities.

Data integration means combining data from different sources and giving users a unified view. An integrated system solves many of the problems inherent in information silos. It can:

- Maintain and manage all the key monitoring-related parts of the permit.

- Manage the entire monitoring plan.

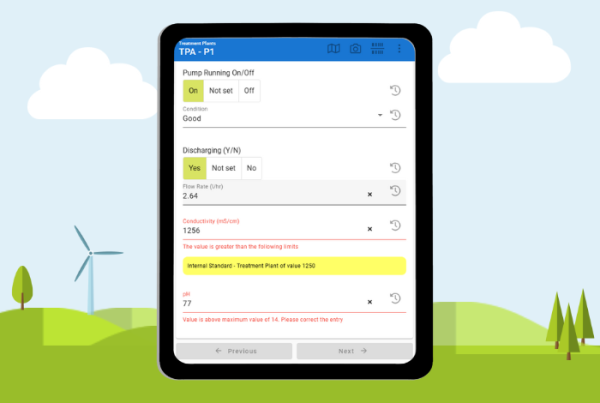

- Collect and validate data, and raise alerts when there are problems.

- Combine, collate, aggregate and calculate different data sets.

- Provide a single, consistent platform for analysing and reporting all monitoring data.

- Share information across an organisation in a controlled and secure way.

- Avoid many of the human errors caused by copying and pasting from one system to another and into, for example, spreadsheets.

How should environmental monitoring data be organised?

With so many different data sets, how can they be combined into a single interface? It takes a good deal of skill and experience. With the right tools, however, it can be done with practically any type of monitoring data.

Automation is also vital. Ideally, a system should minimise the need for users to keep populating it with data and fixing import problems.

Data collection

How are your data collected and stored today? Most organisations have numerous different loggers or sensors, laboratory results and production data. Today these data are most likely managed in spreadsheets or proprietary databases. To manage environmental data properly, all these different sources need to be collated in one manageable location.

Layout

Files from multiple silos will inevitably have almost as many different layouts as there are data sources, and it is usually neither practical nor possible to impose consistency on them. The list of things that vary is long: header information, column names, column order, and file orientation (whether each column is a different parameter in a cross-tab layout, or whether the column headers are sample points). The importer therefore has to be smart and flexible. It should be able to read most files without user input, interpreting column order, orientation and so on. Sometimes this will not be enough, so the user must be able to configure special cases and plug in custom scripts or macros to handle the more complicated layouts.
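As an illustration of one orientation issue, the sketch below turns a cross-tab file (one column per parameter) into one reading per row. It is a minimal example, not a description of any particular importer: the function name `crosstab_to_long` and the column names `sample_point` and `date` are assumptions made for this example.

```python
import csv
import io


def crosstab_to_long(text, key_columns=("sample_point", "date")):
    """Convert a cross-tab CSV (one column per parameter) to one reading per row.

    The key column names are hypothetical; a real file would need them configured.
    """
    reader = csv.DictReader(io.StringIO(text))
    readings = []
    for row in reader:
        keys = {name: row[name] for name in key_columns}
        for name, value in row.items():
            # Skip the key columns themselves and any empty cells.
            if name in key_columns or value in (None, ""):
                continue
            readings.append({**keys, "parameter": name, "value": value})
    return readings


sample = "sample_point,date,pH,NO3\nBH1,2018-01-02,7.1,\n"
print(crosstab_to_long(sample))
```

Values are kept as text here; converting them to numbers is a separate step because of the special cases that individual data streams bring.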

Special cases

Many data streams have special cases or rules that must be applied. Some equipment reports dates in US format. The importer must know this and know how to handle it. Is 08/07/2018 in August or July?

Date formats vary widely: 01/02/2018, 2018-01-02, 01-Feb-2018 and so on.
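Because a date such as 08/07/2018 cannot be disambiguated from the text alone, one option is to record the date convention for each data stream rather than guess. The sketch below assumes a hypothetical per-stream setting called `convention`; the convention names are made up for this example.

```python
from datetime import date, datetime

# Hypothetical convention names mapped to strptime patterns.
DATE_FORMATS = {
    "day_first": "%d/%m/%Y",       # 08/07/2018 is 8 July
    "month_first": "%m/%d/%Y",     # 08/07/2018 is 7 August (US style)
    "iso": "%Y-%m-%d",             # 2018-01-02
    "day_month_name": "%d-%b-%Y",  # 01-Feb-2018 (month names depend on locale)
}


def parse_date(text: str, convention: str) -> date:
    """Parse a date using the convention configured for its data stream."""
    return datetime.strptime(text.strip(), DATE_FORMATS[convention]).date()


print(parse_date("08/07/2018", "day_first"))    # 2018-07-08
print(parse_date("08/07/2018", "month_first"))  # 2018-08-07
```

A value that does not match the configured pattern raises `ValueError`, which is usually preferable to silently storing the wrong month.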

Many .csv files use commas as column separators, but other delimiters such as semicolons or tabs are also found. The importer needs to know what to expect.
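Where the delimiter is not configured in advance, a simple heuristic is to pick the candidate character that occurs the same non-zero number of times on every line. The function below is a rough sketch of that idea under the assumption that fields are not quoted; `detect_delimiter` is a name invented for this example.

```python
def detect_delimiter(sample, candidates=(",", ";", "\t")):
    """Guess the column delimiter from the first few lines of a file.

    Assumes no quoted fields containing delimiter characters.
    """
    lines = [line for line in sample.splitlines() if line.strip()]
    best, best_count = None, 0
    for delimiter in candidates:
        counts = {line.count(delimiter) for line in lines}
        if len(counts) == 1:  # same count on every line
            count = counts.pop()
            if count > best_count:
                best, best_count = delimiter, count
    if best is None:
        raise ValueError("could not detect a consistent delimiter")
    return best


sample = "date;value\n2018-01-02;0,01\n2018-01-03;0,02\n"
print(repr(detect_delimiter(sample)))  # ';'
```

In the sample, the comma appears only in the data lines (as a decimal separator), so it is rejected and the semicolon is chosen.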

Other files may be missing columns. For example, a flow meter knows which meter it is and may not include its name in the output file. This has to be specified to the importer, for example with a rule such as "all files arriving in this folder come from laboratory X".
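A folder-based rule of this kind could be sketched as a lookup from the folder name to the column values that the file itself omits. The folder names, column names and the `apply_folder_defaults` helper below are all hypothetical.

```python
from pathlib import PurePath

# Hypothetical rules: values implied by the folder a file arrives in.
FOLDER_DEFAULTS = {
    "flow_meter_3": {"sample_point": "Flow meter 3"},
    "lab_x": {"laboratory": "Lab X"},
}


def apply_folder_defaults(file_path, row):
    """Fill in columns the file omits, based on the folder it arrived in.

    Values present in the row take precedence over the folder defaults.
    """
    folder = PurePath(file_path).parent.name
    defaults = FOLDER_DEFAULTS.get(folder, {})
    return {**defaults, **row}


print(apply_folder_defaults("incoming/lab_x/results.csv", {"value": "7.1"}))
```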

In laboratory results, < symbols are common (e.g. <0.01). They simply mean ‘below detection limit’. This does not mean zero, and different regulators have different requirements for handling such values. Should the value count as the full detection limit, half of it, or zero? Your importer needs to know which, so that it can store the correct number in the database for calculations and interpretation while keeping the original text for reports.
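One way to keep both the reported text and a usable number is sketched below. The policy names `full`, `half` and `zero` and the `LabResult` type are assumptions for illustration; which policy applies depends on the regulator.

```python
from dataclasses import dataclass

# Hypothetical policy names: the fraction of the detection limit to store.
POLICY_FACTORS = {"full": 1.0, "half": 0.5, "zero": 0.0}


@dataclass
class LabResult:
    text: str       # original text, kept for reports (e.g. "<0.01")
    value: float    # numeric value used in calculations
    censored: bool  # True when the result was below the detection limit


def parse_lab_result(raw, policy="half"):
    """Parse a lab value, applying the configured below-detection-limit policy."""
    text = raw.strip()
    if text.startswith("<"):
        limit = float(text[1:])
        return LabResult(text, limit * POLICY_FACTORS[policy], True)
    return LabResult(text, float(text), False)


print(parse_lab_result("<0.01", policy="half"))
```

Keeping the `censored` flag alongside the number lets later analysis distinguish a measured 0.005 from a substituted one.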

Decimal commas (for example 0,01) are common, especially in continental Europe and Latin America. The importer must understand that this comma is a decimal "point", not a column separator.
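A minimal sketch of number parsing with a configurable decimal separator follows. It assumes that whichever of "." and "," is not the decimal separator is a thousands separator, which will not hold for every source; `parse_number` and its `decimal_sep` parameter are names chosen for this example.

```python
def parse_number(text, decimal_sep="."):
    """Parse a number written with either a decimal point or a decimal comma.

    Assumption: the other character ("," or ".") is a thousands separator.
    """
    cleaned = text.strip()
    if decimal_sep == ",":
        cleaned = cleaned.replace(".", "").replace(",", ".")
    else:
        cleaned = cleaned.replace(",", "")
    return float(cleaned)


print(parse_number("0,01", decimal_sep=","))     # 0.01
print(parse_number("1.234,5", decimal_sep=","))  # 1234.5
```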

So in the setup, the user or administrator needs options and flexibility in how any data stream is configured.

Consistency

Naming can be a big problem if it is not handled properly. Different equipment may call the same location by different names (for example, one system's "channel 1" may be another's "stack 1"), and people recording names by hand may write them differently, for example BH1, BH01 or Borehole 1. All of these have to be merged, and the importer has to know the variants. A good importer will learn these aliases over time and save the mappings so that it only has to ask once.
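The "ask once, then remember" behaviour can be sketched as a small alias registry. This is an illustrative in-memory version only; the class name `AliasRegistry` is invented here, and a real system would persist the mappings.

```python
class AliasRegistry:
    """Remember which raw names map to which canonical location name."""

    def __init__(self):
        self._aliases = {}

    @staticmethod
    def _key(name):
        # Ignore case and repeated or surrounding whitespace when matching.
        return " ".join(name.lower().split())

    def learn(self, alias, canonical):
        """Store a mapping once the user has confirmed it."""
        self._aliases[self._key(alias)] = canonical
        self._aliases[self._key(canonical)] = canonical

    def resolve(self, name):
        """Return the canonical name, or None if the user must be asked."""
        return self._aliases.get(self._key(name))


registry = AliasRegistry()
registry.learn("BH01", "BH1")
registry.learn("Borehole 1", "BH1")
print(registry.resolve(" bh01 "))  # BH1
print(registry.resolve("BH2"))     # None
```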

Format

Can your system handle multiple file formats, for example CSV files, Excel spreadsheets or text files?

Calculations

Automatic aggregation and calculation are often not as simple as they might seem. Take the apparently simple example of a compliance limit in mg/m3. There may be a sensor measuring mg/l and a flow meter measuring m3/minute, each logging at a different interval. The calculation engine has to know where to look in time to find the nearest applicable reading. It may also need rules for handling a counter that rolls over and returns to zero. Once the result has been calculated, there may be a new data set that itself needs a compliance check (and an alert, where appropriate). There are many complications to resolve.
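Two of these complications, finding the nearest reading in time and handling a counter that rolls over, can be sketched as follows. The function names, the `tolerance` parameter and the `rollover_at` parameter are assumptions for this example, and the rollover rule assumes the counter wraps at most once between readings.

```python
from bisect import bisect_left
from datetime import datetime, timedelta


def nearest_reading(times, values, when, tolerance):
    """Return the value logged closest to `when`, or None if none is close enough.

    `times` must be sorted in ascending order and aligned with `values`.
    """
    i = bisect_left(times, when)
    candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
    if not candidates:
        return None
    best = min(candidates, key=lambda j: abs(times[j] - when))
    if abs(times[best] - when) > tolerance:
        return None
    return values[best]


def counter_delta(previous, current, rollover_at):
    """Increase between two counter readings, allowing for one wrap to zero."""
    if current >= previous:
        return current - previous
    return rollover_at - previous + current


flow_times = [datetime(2018, 1, 2, 10, 0), datetime(2018, 1, 2, 10, 15)]
flow_values = [2.0, 3.0]  # e.g. m3/minute
when = datetime(2018, 1, 2, 10, 4)
print(nearest_reading(flow_times, flow_values, when, timedelta(minutes=5)))  # 2.0
print(counter_delta(9990, 15, rollover_at=10000))  # 25
```

Returning `None` when no reading falls within the tolerance makes gaps explicit instead of pairing a concentration with a stale flow value.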

In conclusion, there are many reasons to integrate environmental systems, and we have listed the most common difficulties. Let the experts help you achieve a smooth, agile implementation and boost your business with easy-to-use environmental intelligence.

About MonitorPro

MonitorPro, the complete and professional environmental data monitoring system, is the IT solution trusted by environmental teams around the world to manage their environmental compliance and data collection. MonitorPro is a web-based or locally hosted solution for collecting ALL sources of environmental data, whether generated by loggers, laboratories, weather stations, calculations or other data repositories. MonitorPro is the first environment, health and safety software solution to have received MCERTS accreditation from the Environment Agency.

About EHS Data

EHS Data is a leading UK software company that provides environmental data management solutions to more than 1,000 sites in 40 countries worldwide. For more than 20 years, our versatile software solution, MonitorPro, has helped organisations save time and improve planning, quality control, site analysis and reporting in order to manage their environmental obligations and sustainability.

ISO 27001

EHS Data is ISO 27001 certified.

Carbon negative

In 2020, EHS Data achieved carbon-negative status and is committed to maintaining it every year.